This post is part of the F# Advent Calendar in English 2015 project. Check out all the other great posts there! And special thanks to Sergey Tihon for organizing this.

A few weeks ago, while at BuildStuff, I walked next to an image built with words, like a tag cloud picturing some company’s logo. My first reaction was: “That looks nice, but has someone been paid to manually placed all these words? I bet it can be automated…”

I started putting together a script, in order to fill an image with words. My first attempt had disastrous performance and memory characteristics. Several hours of work later (quite a few, actually), and with contributions from my co-workers, we ended-up with a much better algorithm.

F# is a wonderful language to experiment with ideas. The convenience of quickly defining types with almost no ceremony lets you focus on what you’re really trying to build. The power of the .NET ecosystem lets you use all the standard APIs that you expect to find on a serious platform. This is really enjoyable and helps you to build simple things first, and evolve to more complicated ones while staying focused.

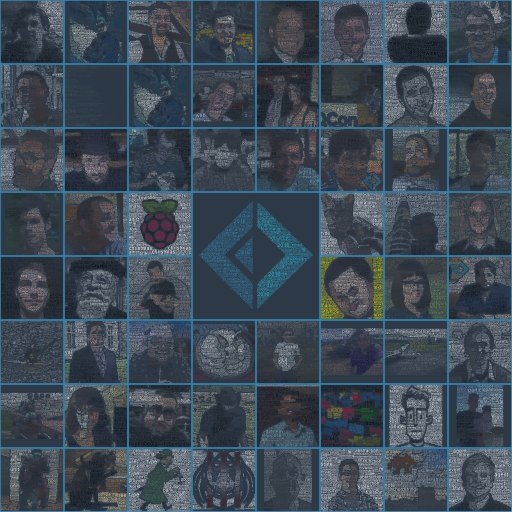

In the end we’re able to produce images such as:

In order to produce such an image, we need an image to use as the model, and a list of words to fill the shape with. We had much fun doing it, both trying to identify strategies to make the images look good and get the generation time down. You can find the full source code on Github: https://github.com/pirrmann/Wordz

The goal: build a nice picture

Another area where F# shines is related to getting data from various sources and manipulate it. This enables so many scenarios. What if we could get pictures, and related text, from some place such as https://sergeytihon.wordpress.com/2015/10/25/f-advent-calendar-in-english-2015/ for instance, and generate all sorts of images?

Step 1: parse the the posts list

I’m happy @TeaDrivenDev hasn’t published his first post idea about generating the RSS feed from the FsAdvent page, because I also have to parse the page in order to get my data… so first thing, we need to get the posts URLs from the page. It gives me a chance to mention the HTML Type Provider, which is part of the FSharp.Data library. Accessing the list of posts and downloading all the posts is as easy as:

type adventCalendarPage =

HtmlProvider<"https://sergeytihon.wordpress.com/[...]/">

type PageRow =

adventCalendarPage.FAdventCalendarInEnglish2015.Row

let postsTable =

adventCalendarPage

.GetSample()

.Tables

.``F# Advent Calendar in English 2015``

let extractPostsLinks (tr:HtmlNode) =

let lastTd = tr.Elements("td") |> Seq.last

lastTd.Elements("a")

|> List.map (fun a -> a.AttributeValue("href"))

let postLinks =

postsTable.Html.Descendants("tr")

|> Seq.tail

|> Seq.map extractPostLinks

let downloadPostsAsync links =

links

|> Seq.map HtmlDocument.AsyncLoad

|> Async.Parallel

let postsDownloads =

postLinks

|> Seq.map downloadPostsAsync

|> Async.Parallel

|> Async.RunSynchronously

let allPosts = Array.zip postsTable.Rows postsDownloads

From the previous sample, you may notice how the HTML type provider also allows you to access the elements of the page through the DOM. This is quite representative of how a good library design gracefully lets you use the underlying technology when the high-level API doesn’t suit your needs.

Step 2: extract words

We have now obtained all published FsAdvent posts in the form of HtmlDocument objects, but we don’t have their content yet… No magic type provider here, all posts are hosted on different blogging platforms, I had to use several strategies and fallbacks to identify the HTML element containing the post in each case. Semantic web is really not there yet! The search function finally looks like this:

let search =

findDivWithEntryClassUnderDivWithPostClass

|> fallback findWintellectContent

|> fallback findDivWithClassStoryContent

|> fallback findArticle

|> fallback findElementWithClassOrIdPost

|> fallback findH1TitleParentWithEnoughContent

|> fallback findScottwContent

|> fallback findMediumContent

|> fallback wholePageContent

The next step of interest is how to extract the most important words from each post. Another handy feature of F# are computation expressions, and in particular the built-in ones such as async an seq. Returning a sequence while recursively traversing a tree is as easy as:

let getMultiplier (node:HtmlNode) =

match node.Name() with

| "h1" -> 16 | "h2" -> 12 | "h3" -> 8

| "h4" -> 4 | "h5" -> 3 | "h6" -> 2

| _ -> 1

let rec collectAllWords multiplier (node:HtmlNode) = seq {

match node.Elements() with

| [] ->

let text = node.InnerText()

let words =

text.Split(delimiters)

|> Seq.filter (not << String.IsNullOrWhiteSpace)

|> Seq.filter (fun s -> s.Length > 1)

|> Seq.map (fun s -> s.ToLowerInvariant())

for word in words do

if not(filteredWords.Contains word) then

yield word, multiplier

| children ->

let multiplier' = getMultiplier node

yield! children

|> Seq.collect (collectAllWords multiplier')

}

The last step once we’ve obtained all the words from each post with is to group them and sum their weight in order to get an indicator of their importance in the post. We define a function for that:

let sumWeights words =

words

|> Seq.groupBy fst

|> Seq.map (fun (word, weights) -> word,

weights |> Seq.sumBy snd)

|> Seq.sortByDescending snd

|> Seq.take 100

|> Seq.toList

Step 3: get Twitter profile pictures

So we have all words from the FsAdvent posts published so far, and their relative importance in each post. But in order to create a visualization, we need model images, right? Let’s download the Twitter profile pictures of the authors! The profile urls can be extracted from the FsAdvent page (actually some of them are not links to twitter, so I had to cheat a bit, and this is why the function extractCleanLink is omitted…)

let extractProfileLink (tr:HtmlNode) =

let lastTd = tr.Elements("td") |> Seq.item 1

let a = lastTd.Elements("a") |> Seq.head

a.InnerText(), a.AttributeValue("href")

let profilesLinks =

postsTable.Html.Descendants("tr")

|> Seq.tail

|> Seq.map extractProfileLink

let downloadAndSavePictureAsync (name:string, twitterProfileUrl:string) = async {

let fileName = getFileName "profiles" name

let cookieContainer = new Net.CookieContainer()

let! twitterProfileString = Http.AsyncRequestString(twitterProfileUrl,

cookieContainer = cookieContainer)

let twitterProfile = HtmlDocument.Parse(twitterProfileString)

let profileImageLink =

twitterProfile.Descendants("a")

|> Seq.find(fun a -> a.HasClass("ProfileAvatar-container"))

let profilePictureUrl = profileImageLink.AttributeValue("href")

let! imageStream = Http.AsyncRequestStream profilePictureUrl

use image = Image.FromStream(imageStream.ResponseStream)

image.Save(fileName, Imaging.ImageFormat.Png)

return () }

profilesLinks

|> Seq.map (extractCleanLink >> downloadAndSavePictureAsync)

|> Async.Parallel

|> Async.RunSynchronously

|> ignore

Step 4: cut the profile pictures into layers

My early experiments with this did not have any feature to cut pictures into layers, but I didn’t want to manually edit each picture profile! So what I’ve done to build “layers” is just to cluster pixels by color similarity (actually I’ve tried to really cluster them with a K-means cluster but I ended up using my custom clustering with magic numbers, as it performs better on that specific set of pictures).

This topic could be a blog post of its own so I’ll just sum up the steps I’ve performed:

- In order to get rid of outliers, first blur the image

- then generate color clusters using the following algorithm:

- considering each pixel, if it’s close enough (below a given threshold) to an existing cluster, put it in the cluster. If too far from any existing cluster, create a new cluster with the single pixel in it.

- after having seen each pixel, merge clusters that are close enough

- finally, merge clusters that are too small with their nearest neighbour, regardless of the distance

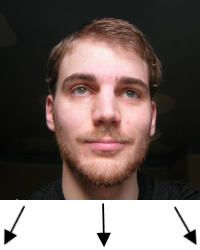

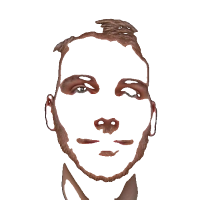

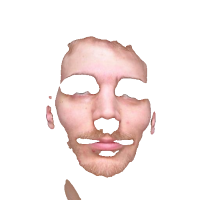

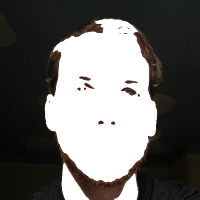

This way, we can go from one image to several ones with the grouped pixels:

Step 5: fill images with words

As some authors have decided to blog more that once, I’ve decided to merge their posts and generate a single image per author.

let mergePosts posts =

let allImportantWords =

posts

|> Seq.collect (fun post -> post.Words)

|> Seq.toList

{ Seq.head posts with Words = sumWeights allImportantWords }

let mergedPosts =

parsePosts allPosts

|> Seq.groupBy (fun post -> post.Author)

|> Seq.map(fun (_, posts) -> mergePosts posts)

|> Seq.toArray

Using sumWeights to merge posts is like considering that each post is a paragraph containing only its 100 most important words.

I then consider each layer generated previously from the Twitter profile picture of an author, and fill it with the most important words from his/her posts. This generates one file per layer on the disk.

Step 6: generate the calendar!

So… 62 posts (before the last one) – 2 which were not published – 1 because I decided to group Steffen’s posts (even if scheduled on 2 different dates) + 1 because Steffen’s post have been unmerged (as I neeeded 60 posts in the end) = 60 posts (yeah!). This gives me a nice 8 * 8 square, with a 2 * 2 tile in the middle for the F# foundation! All is needed is to iterate on the generates layers filled with words, and place them on the calendar. I’ve randomly shuffled the posts list, can you identify everybody? Here is the result, enjoy!

(click on the calendar to view the full size image)

(click on the calendar to view the full size image)

I’ve just updated this post with all posts written so far…

Pingback: F# Advent Calendar in English 2015 | Sergey Tihon's Blog

Pingback: F# Weekly #1, 2016 – New Year Edition | Sergey Tihon's Blog

Pingback: The week in .NET - 1/5/2015 - .NET Blog - Site Home - MSDN Blogs

Pingback: 주간닷넷 2016년 1월 5일 - Korea Evangelist - Site Home - MSDN Blogs

Pingback: The week in .NET - 1/12/2015 - .NET Blog - Site Home - MSDN Blogs

Pingback: MSDN Blogs